Ethical data collection with AI and what to expect in the future

17 September 2025

Last updated: December 2025

ChatGPT and similar NLP-driven models are reshaping the future of information gathering. Businesses are always in need of more real-time data and pipelines. According to IDC (International Data Corporation), the volume of data roughly doubles every four years, and AI accelerates that growth even further.

Rapid growth means that some businesses may overlook ethical practices when scraping and obtaining web data with AI, which is detrimental to their long-term development. Trusted infrastructure providers, such as Astro, offer whitelisted proxies for AI data gathering starting from $3.65 per 1 GB (you can buy them after a free trial available through the Astro Support Team). Independent review platforms, such as dieg.info, assist in selecting the best proxy providers on the market.

Why use AI in data gathering?

Data collection is getting more costly. As automated tools improve, it’s becoming increasingly challenging to extract data from HTML sources:

- Website layout changes can break parsers and selectors.

- CAPTCHAs and browser fingerprinting disrupt workflows.

- Teams spend more time fixing scrapers than innovating.

- Dynamic content in JavaScript-heavy web pages requires complex rendering.

How can AI help with data collection?

Generative AI models can adjust data collection techniques based on prompts, which are sets of instructions written in natural language. The process is complex but highly configurable:

| Area | Usage | Example |
| --- | --- | --- |
| Adaptive extraction | AI identifies key fields even if the HTML structure changes | An e-commerce site updates its layout, but the AI still correctly extracts product names, prices, and availability |
| Self-correction | AI checks whether extracted data is inconsistent and adjusts extraction rules | A travel website switches from tables to cards; the AI updates parsing rules automatically to continue collecting flight times |
| Semantic understanding | AI interprets context and relationships between data elements | On a marketplace, AI distinguishes between “base product” and “bundle deal” and categorizes them properly |
| Anomaly detection | AI detects unusual patterns and inconsistencies in harvested data | A retailer’s product feed suddenly shows a 90% price drop across categories; the AI flags this as a probable data error |
| Automated scheduling | AI evaluates whether the crawling frequency is optimal and adjusts it accordingly | News portals update hourly, while blogs change weekly; AI learns these rhythms and adapts scraping intervals |
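The anomaly detection row can be illustrated with a small sketch. It is a plain rule-based check rather than an AI model, and it only shows the kind of signal such a system might raise. The function name `flag_price_anomalies`, the thresholds, and the sample prices are all assumptions made for illustration.

```python
def flag_price_anomalies(previous, current, max_drop=0.5, min_share=0.5):
    """Compare two price snapshots keyed by product ID.

    Returns (suspicious_ids, feed_alert). A product is suspicious when its
    price fell by at least `max_drop` (0.5 = 50%). `feed_alert` is True when
    at least `min_share` of the compared products are suspicious, which
    points to a feed-wide data error rather than a genuine discount.
    """
    suspicious = []
    compared = 0
    for product_id, old_price in previous.items():
        new_price = current.get(product_id)
        if new_price is None or old_price <= 0:
            continue  # nothing to compare against
        compared += 1
        drop = (old_price - new_price) / old_price
        if drop >= max_drop:
            suspicious.append(product_id)
    feed_alert = compared > 0 and len(suspicious) / compared >= min_share
    return suspicious, feed_alert


# Hypothetical snapshots: two of three products lose 90% of their price.
yesterday = {"sku-1": 100.0, "sku-2": 40.0, "sku-3": 10.0}
today = {"sku-1": 10.0, "sku-2": 4.0, "sku-3": 9.5}
suspicious_ids, feed_alert = flag_price_anomalies(yesterday, today)
```

In practice, flagged records would likely be held back for review instead of being passed straight to pricing or analytics systems.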

3 trends for data gathering with AI in 2026

AI will guide future developments in data gathering, according to Scott Vahey, Director of Technology at Ficstar. Three major trends are emerging:

- AI vs. AI: The competition between automated scraping systems and site-level AI defenses will intensify. Both sides will implement intelligent mechanisms.

- From Big Data to smart insights: Collecting large volumes of information is just a first step; what's really important is how rapidly organizations transform raw data into actionable insights.

- Price as a priority: The use of AI data extraction for dynamic pricing, market analysis, and tracking consumer demand will grow, helping businesses stay ahead of competitors.

McKinsey Quarterly highlights another trend: unstructured information, such as videos, pictures, and chats, will now be gathered with AI-powered tools alongside traditional data.

Why use ethical proxies in AI-assisted data extraction?

Despite AI's advantages, checking and regulating large volumes of information is a challenging task. Proxies complicate it even further, as providers must adhere to Know Your Customer (KYC), Anti-Money Laundering (AML), and other ethical compliance policies:

- Speed and scale: AI scrapers can generate thousands of requests per second. That efficiency also amplifies any compliance mistake.

- Autonomy: “Self-correction” systems might circumvent protections unintentionally (e.g., solving CAPTCHAs that shouldn’t be solved under the current compliance conditions).

- Jurisdictional issues: Regulations like GDPR and CCPA impose stricter controls on how data is collected and processed.
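One common way to keep request volume within agreed limits is a token bucket. The sketch below is a minimal, single-threaded version. The class name `TokenBucket`, the chosen rate, and the injectable `clock` parameter are assumptions for illustration, not part of any specific proxy provider's tooling.

```python
import time


class TokenBucket:
    """Allow at most `rate_per_sec` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock             # injectable so the limiter can be tested
        self.last = clock()

    def try_acquire(self):
        """Return True if a request may be sent now, False if it must wait."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical limit: 2 requests per second with a burst of 2.
limiter = TokenBucket(rate_per_sec=2, capacity=2)
first_allowed = limiter.try_acquire()
```

A scraper would call `try_acquire()` before each request and back off when it returns `False`, so a misconfigured job cannot silently multiply a compliance mistake.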

To stay compliant, businesses should put matching controls on their AI implementations. Astro offers transparent operational techniques with detailed logs and statistics on every IP address.

Dieg.info helps you choose the best proxies for data gathering

AI in data collection is a promising concept, but it should be used with ethical guidelines in mind. Buying residential and mobile proxies is a top priority, and dieg.info provides expert guidance in this area.

Dieg.info offers ratings and in-depth reviews written by IT experts on specific hosting services, such as Astro. It also serves as an industry overview and news platform, where readers can track market leaders and follow developments in the proxy and hosting ecosystem. Readers can freely contribute and leave their own reviews.

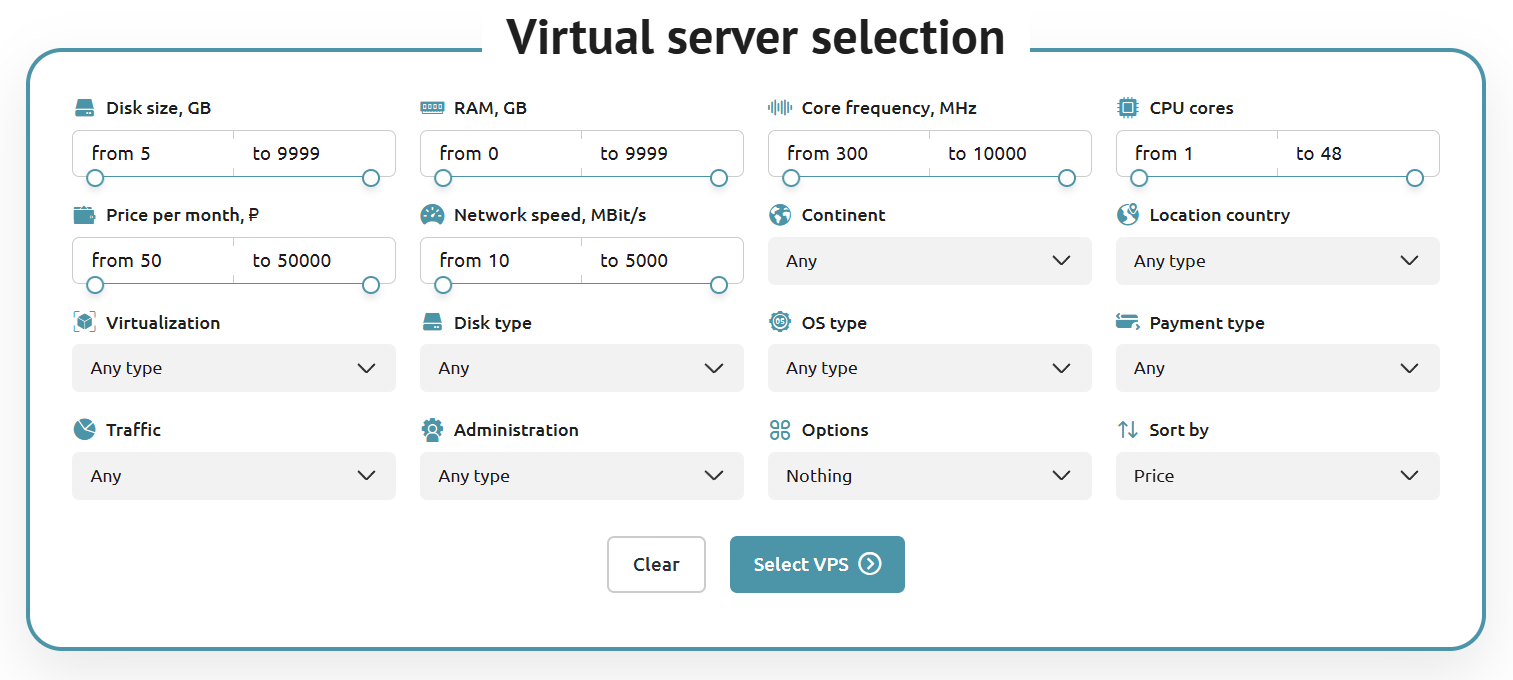

The Virtual Server Selection Tool on diegfinder.com helps users define their needs across various categories. It takes into account the most important criteria, such as server speed, reliability, pricing, and quality of technical support. Combined with market intelligence from dieg.info, this helps users make data-driven decisions.

Related questions

- Data protection involves multiple layers: sourcing proxies transparently (with KYC verification), encrypting sensitive information, respecting robots.txt and rate limits, and maintaining logs to audit how AI systems access and process data.

- The seven principles include human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability.

- Transparency is a major factor in the future of AI ethics because it directly impacts trust and fairness in AI systems. Transparent AI systems allow users to understand how decisions are made, reducing fear. When AI systems are transparent, tracing errors in specific data is easier.
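Two of the layers named in the first answer above, respecting robots.txt and keeping audit logs, can be sketched with Python's standard library. The helper names `build_policy` and `may_fetch`, the logger name, the `example-bot` user agent, and the sample robots.txt content are assumptions for illustration. A real collector would download each site's own robots.txt instead of parsing an inline string.

```python
import logging
from urllib.robotparser import RobotFileParser

# Audit logger; handlers and storage are left to the application.
logger = logging.getLogger("collector.audit")


def build_policy(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser, user_agent, url):
    """Check a URL against the parsed rules and record the decision."""
    allowed = parser.can_fetch(user_agent, url)
    logger.info("agent=%s url=%s allowed=%s", user_agent, url, allowed)
    return allowed


# Hypothetical robots.txt for demonstration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

policy = build_policy(SAMPLE_ROBOTS)
public_ok = may_fetch(policy, "example-bot", "https://example.com/products")
private_ok = may_fetch(policy, "example-bot", "https://example.com/private/report")
delay_seconds = policy.crawl_delay("example-bot")
```

Logging every decision, including refusals, gives reviewers a trail showing that the collector checked site rules before each request.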